Web Scraping 101

web scraping to collect data

campfire in the Poconos

For the second project at Metis, we were asked to build a multivariate linear regression model using data that we scraped from the web. In class we learned to use BeautifulSoup, a Python HTML parsing library. I was also introduced to Scrapy by one of our TAs, and decided to try it out for this project. I found that Scrapy had a slightly steeper learning curve than BeautifulSoup, but once I got the hang of it, I had a ton of fun writing Spiders to crawl all over some websites to collect the data I needed for my project.

Scrapy is a free and open source web scraping/crawling framework written in Python. For this post I wanted to list some of the tricks I came across to make life a little easier for a beginner using Scrapy. If you haven’t used Scrapy before check out the tutorial first. It will walk you through the basics.

Scrapy Tips for Beginners to get Started:

robots.txt

This isn’t actually a Scrapy tip but a web scraping tip in general. Always make sure to check the robots.txt file of a website (This is usually found at http://website.com/robots.txt). We want to be respectful and not harm the websites we scrape. This text file with tell you if and how you should proceed.

Scrapy Selector

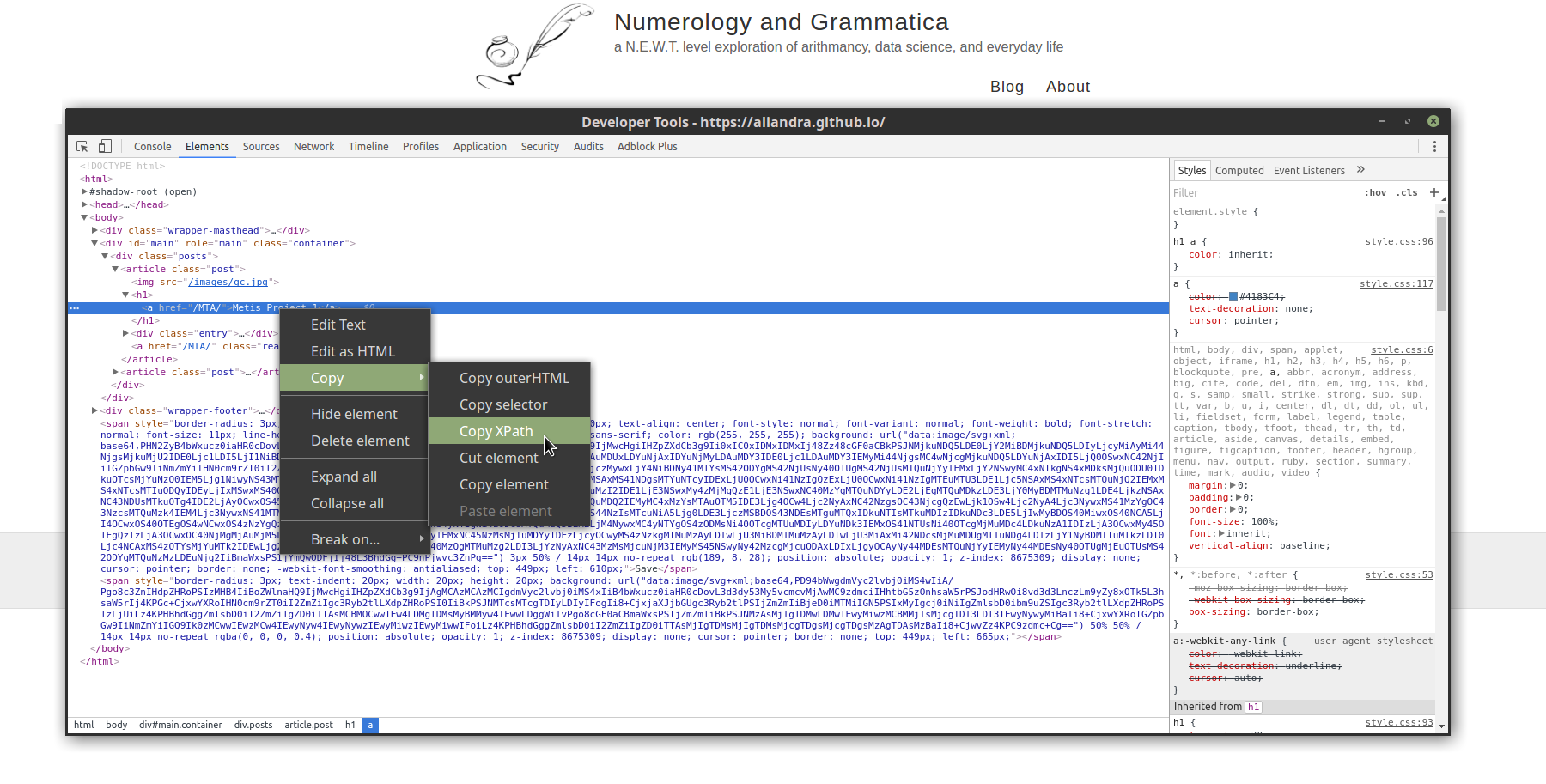

To select elements on a page, we have a choice of CSS or XPath selectors. I suggest learning to use the XPath selector since it allows you to do more than just navigating the structure of a page. With XPath you can better target elements on a page, and it also allows for more flexibility. In fact, Scrapy uses XPath under the hood and CSS selectors are converted to XPath. When you inspect a webpage and come face to face with extremely convoluted HTML (it happens more than you think!) you’re going to want XPath. If you’re using Chrome, an easy way to get the XPath of an element is to right click the data you want and navigate to Copy » Copy XPath.

Scrapy Shell

The Scrapy Shell is a command line tool and an excellent way to test out the code you’ve written. Simply run

scrapy shell 'http://website_to_scrape.com/page1'

I use the Scrapy Shell to test my code and make sure what I wrote is returning the data I want. If not, it’s easy to make some changes or try out a few different things until you get it correct. If I’m using the same code to scrape multiple pages, I will test it on a few of the pages to see if items are the same from page to page of if I need to make a few tweaks here and there.

Custom Settings

We can customize the behavior of our spiders by setting their ‘custom_settings’ attribute. I used the following to cache and throttle my requests so that I don’t hit the servers too hard. We want to be good web citizens and avoid getting our IP banned.

class MySpider(scrapy.Spider):

name = 'myspider'

custom_settings = {

"DOWNLOAD_DELAY": 1.5,

"CONCURRENT_REQUESTS_PER_DOMAIN": 3,

"HTTPCACHE_ENABLED": True

}

- Download Delay: Set the amount of time (in secs) that the downloader should wait before downloading consecutive pages from the same website.

- Concurrent requests: Set the maximum simultaneous requests to a single domain.

- HTTP Cache: enable HTTP cache. This has saved me a few times when I needed to re-scrape the same page.

Hopefully these tips will help other Scrapy beginners save some time. Happy Scraping!